- Home

- Contact information

- My publications

- Research projects:

- Toolkit for researchers:

- Students

I see 3D !

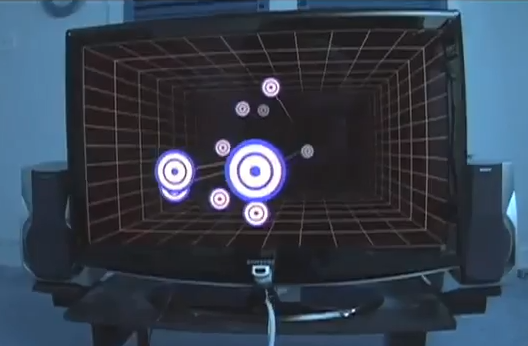

This page presents a non-intrusive system that gives the illusion of 3D. Using low-cost 2D projectors, we project images on the floor. The images are adapted to the viewpoint of the user in order to simulate a 3D virtual world all around him. To achieve this, we estimate in real-time the position of the head. The user can walk freely in the virtual world and interact with it himself, without any proxy such an avatar. There is no limit on the size of the virtual world, except the size of the room in which the system is installed, because multiple projects can be used in conjunction. Note that our system does not require the user to wear anything. For example, there is not need for glasses or markers.

Giving the illusion of 3D is the goal of trompe-l'oeils. Even if those paintings are on a 2D flat surface, we perceive them as 3D if we are at the right viewpoint. Our system does the same, but dynamically. The user's head is tracked and the image projected on the floor is computed according to its position. The perspective is always correct, wherever the user is.

How to cite this work ?

I-see-3D! An Interactive and Immersive System that dynamically adapts 2D projections to the location of a user’s eyesdownload the PDF file of the full paper

S. Piérard, V. Pierlot, A. Lejeune, and M. Van Droogenbroeck

International Conference on 3D Imaging (IC3D)

December 2012 - Liège, Belgium

Differences with related work

There already exist several systems that produce images adapted to the user's viewpoint. This is called “head-coupled perspective” or HCP in the scientific literature. An early system has been proposed by Carolina Cruz-Neira at SIGGRAPH 92. In that system the user is inside a cubic box that is named “cave”. An HCP image is presented on every surface, the walls, the floor, and the ceiling. In that system, the user needs to wear glasses for stereo-vision, and a marker to track the head. More recently, in 2008, Johnny Lee proposed a light version of cave without stereo-vision. His system is based on a television screen to display the HCP images, and a Nintendo Wii remote to track the head. Then, Jérémie Francone proposed something quite similar with smartphones. The user does not need anymore to wear a device since the head is tracked based on the built-in front-facing camera. That system also features interactivity. For example, the cube turns when the smartphone turn, as if the user was holding the cube directly.

A first thing to note about those systems is that the user cannot walk in the virtual world. In the system proposed by Carolina Cruz-Neira, the space in which the user can move is strongly constrained by the walls. And in the two other systems, the virtual world is not all around the user since he has to watch a limited screen. In contrast, our goal is to let the user walk freely in the virtual world.

A second thing to note is that in all those systems, the images are shown on rectangular surfaces. The surface plays the role of the image plane of a pinhole camera whose optical center is located at the head. Therefore, all one has to do is to simulate a pinhole camera with a perspective projection. In contrast, in our system, there is a deformation between the computed image and the one on the floor. We have to take into account the intrinsic and extrinsic parameters of the projectors. The projection is not perspective in our case.

How our system works

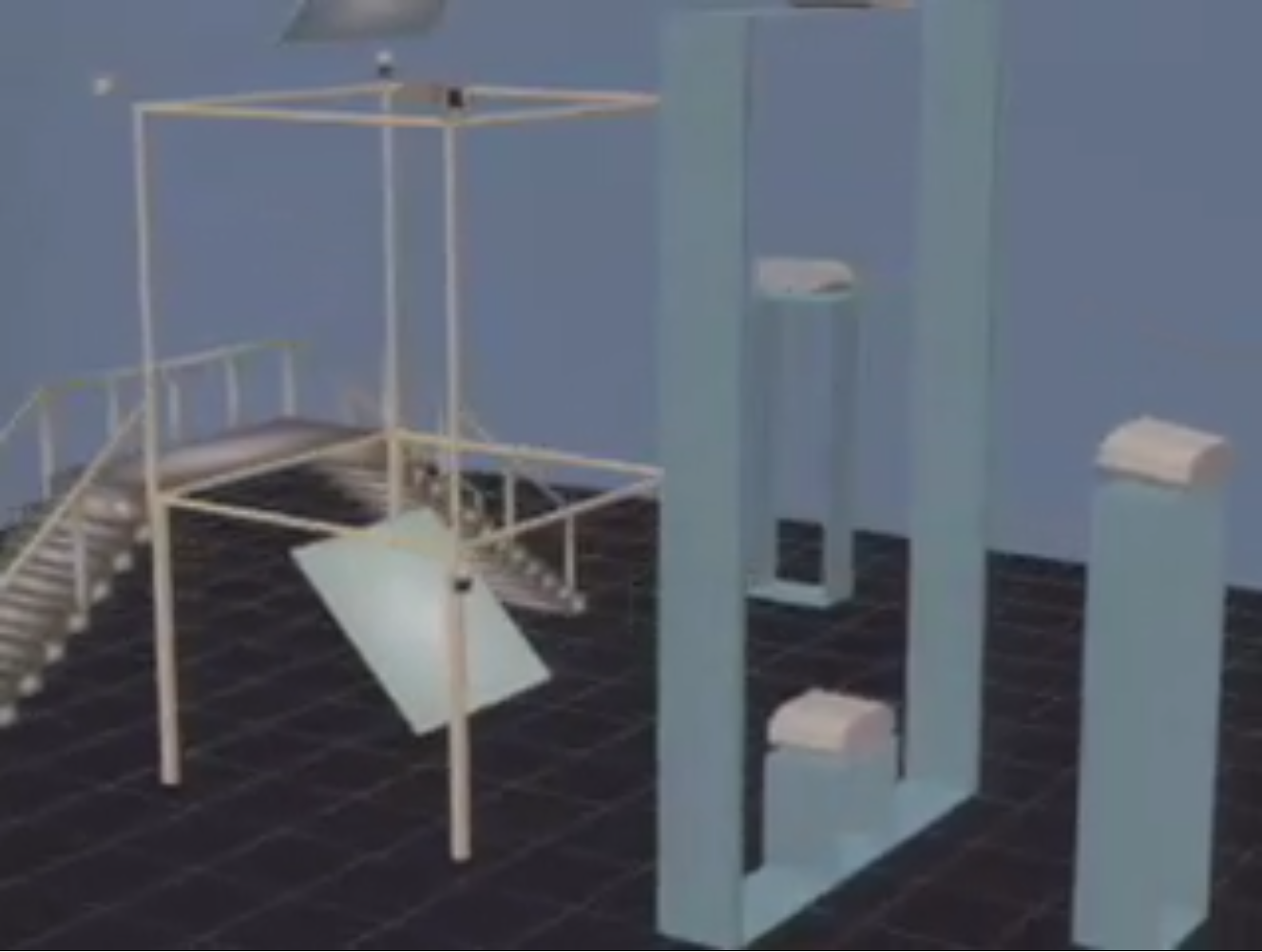

We use multiple sensors to estimate the head position. They behave perfectly in darkness. On the one hand, we use several kinects placed around the scene, and we recover the head position by pose recovery methods. But, in practice, it is difficult to cover a large zone with kinects because of the limited range in which the pose recovery method is able to provide a reliable estimation. Moreover, there is no way to ensure that the pose recovery method will succeed. Therefore, we use another source of information. We place range laser scanners at 15 cm above the floor and scan an horizontal plane with them. This permits us to locate the feet, and therefore to roughly estimate the head location. However, with these range laser scanners, it is impossible to know when the user crouches. Therefore, the kinects and the range laser scanners are complementary.

On each stream of depth images coming from a kinect, we apply a pose recovery algorithm that provides multiple head location hypothesis. The information issued from the range laser scanners is fused to form a unique point cloud. This one is further analyzed to provide multiple person location hypothesis. From all these hypothesis, we aim at estimating the head position. A validation gate is used in conjunction with a Kàlmàn filter. The validation gate rejects outliers, and select the most probable inlier for each source of information. The filter has been optimized in order to minimize the variance of the its output while keeping the bias in an acceptable range. The constant white noise acceleration model has been selected. Therefore, during the prediction step, the output of the Kalman filter is in uniform linear motion.

Once the head position has been estimated, we have to render an image accordingly. The projection is in two parts. A first matrix multiplication gives the coordinates of the point on the floor that should be illuminated, and a second matrix multiplication gives the coordinates of the corresponding pixel and its associated depth. The first matrix depends only on the head location, while the second one depends only on the projector intrinsic and extrinsic parameters. The matrices have been tuned in order to avoid problems related to the clipping mechanism present in 3D rendering pipelines such as OpenGL. You can find more information about this projection is our paper.

Our implementation is based on the libraries OpenNI and OpenGL. OpenNI is used to grab the depth images from the kinects and to apply a pose recovery method on them. Our system can be implemented without any shader, even if the projection is nether orthogonal, nor perspective. The method is accurate to the pixel since the images are rendered directly in the projector's image plane. We generate shadows with the classical algorithm named “Carmack's reverse”. The virtual lights are placed at the real light locations, including the projectors.

Our results

A first video taken from the user's viewpoint, with interactions

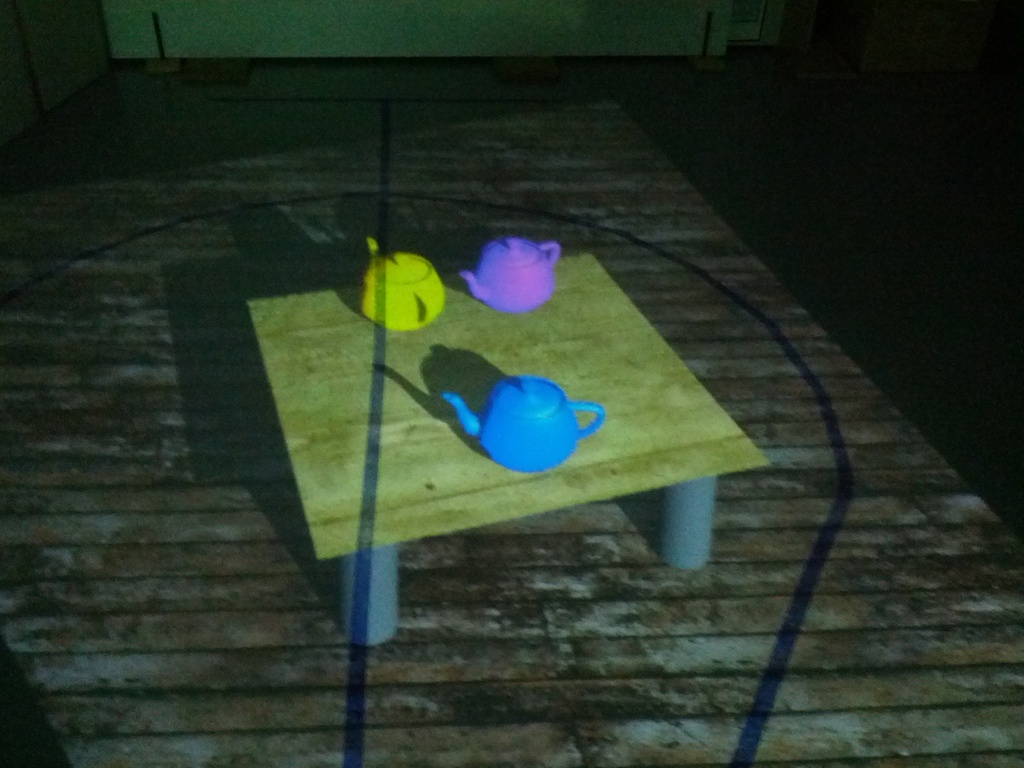

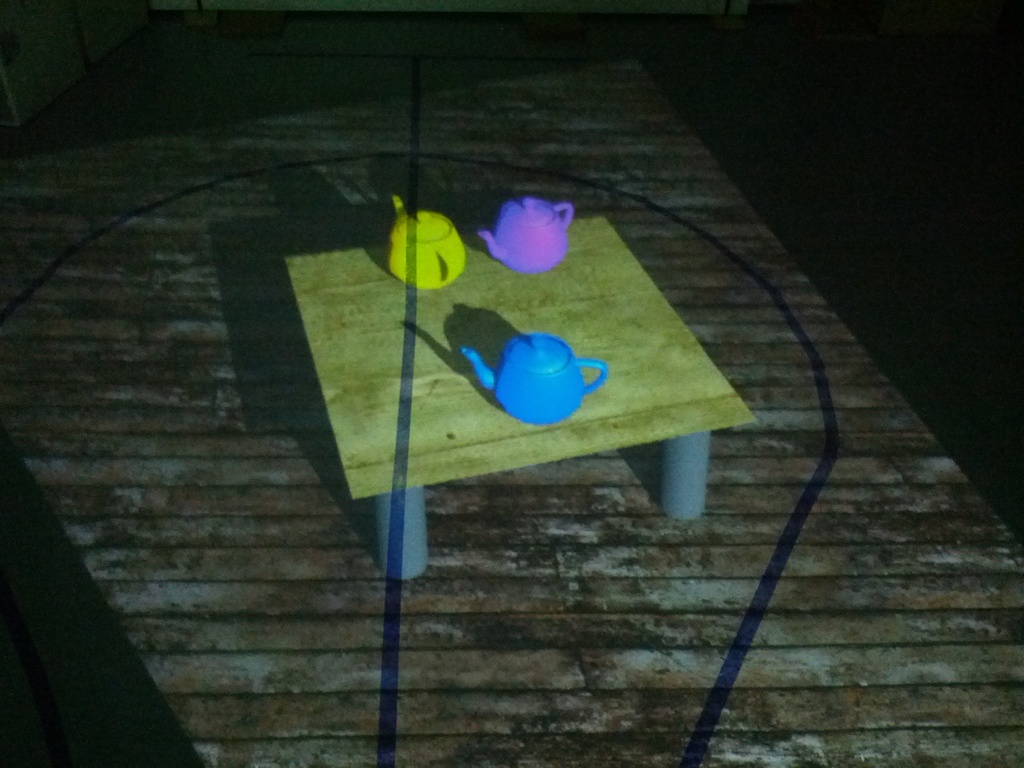

The first video has been taken in our lab with a smartphone located near the user's viewpoint. Three magic teapots are placed on a coffee table. When the user touches a teapot, its color changes. That's to show that interactivity can be easily added to our system. But of course that's not the only kind of interaction that can be implemented. In the current version of our project, there is some possibilities to improve the interactions, since the estimation of the hand positions is based on the pose recovery method bundled with OpenNI, which sometimes fails. Note that everything you see is virtual. There is no real teapot in our lab. There is no table, and there is no wood flooring.

Another video taken from an external viewpoint

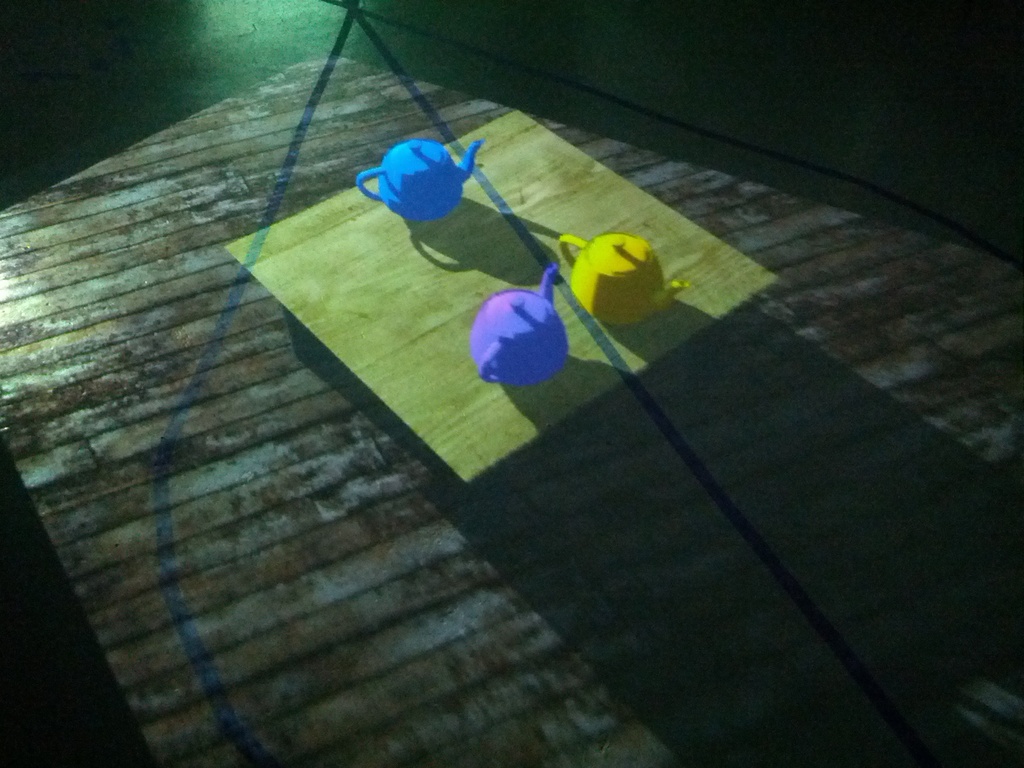

This second video has been taken from an external viewpoint. Its purpose is to let better understand what happens when the images are rendered according to the user's viewpoint. In other words, this shows the effect of head-coupled perspective.

Summary

Our system gives the illusion of 3D to a single user. A virtual scene is projected all around him on the floor with head-coupled perspective. The user can walk freely in the virtual world and interact with it. Multiple sensors are used in order to recover the head position. The estimation is provided by a Kalman filter. The selected sensors behave perfectly in total darkness, and the user does not need to wear anything. The sensors and projectors can be calibrated in less than 10 minutes. The projection is neither orthographic nor perspective. Our rendering method is accurate to the pixel because the images are rendered directly in the projector’s image plane.